I have tried to be aggressive about keeping the size of the app down as it has grown. I made a specific decision early on to avoid image assets and I went through quite some hassle to enable build features to keep the code small.

A while ago Google introduced a new way to package and distribute Android apps called Android App Bundle.

Previously when distributing an Android app, the developer would produce an Android Application Package (often called an APK, based on its standard file extension) that included everything needed to run on a variety of Android devices. This could include things like assets at different resolutions and code for different device architectures that were not all needed on any single device.

It was possible to produce different APKs that were more specialized, but it was quite a bit more effort to set up a build pipeline to produce and distribute them. With App Bundles, Google Play does this for you. An App Bundle contains the same wide array of content for different devices, but Google Play generates a device-specific APK for each device that downloads it.

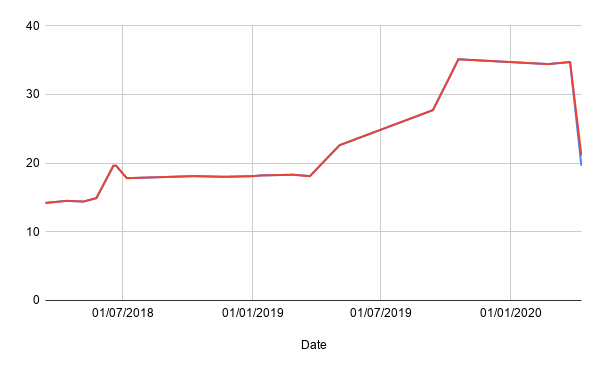

The result is quite staggering - much more than I expected. The graph here shows the size of the app in MB over time, with the conspicuous drop at the end when I switched to using App Bundles. (One interesting thing to note is that the line splits into two at the end. The size is now slightly variable based on device and the split represents the smallest and largest APKs possible).

0.20.1 includes updated logic for “fairness calculations”. This has already been pushed to the server, so it is in fact available in all versions when running with cloud simulation enabled.

This came about mainly because of my efforts to try and generate interesting game settings. My idea was to sort all the setting combinations by fairness, generate new ones based on them (via some minimal genetic algorithm), and then repeat until some good results fall out.

Early attempts

My first attempts did not work well. The game simulation works by playing a few turns using the normal AI, and then seeing what it thinks its chances of winning from the current position are. I defined “fairness” as: take the chance of winning of the best player and the chance of winning of the worst player, and then calculate the ratio of the two. Here are some of the types of games it generated at first:

- Games with lots of players. Since nobody can win, it is perfectly fair.

- Games with a win line that is too big to reach.

- Games with a target score that is too big to reach.
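To make the failure mode concrete, here is a minimal Python sketch of the fairness measure described above (the app itself is .NET, so this is only an illustration). The direction of the ratio (worst divided by best) and the handling of the all-zero case are assumptions, and the name `fairness` is hypothetical.

```python
def fairness(win_chances):
    """Ratio of the worst player's win chance to the best player's.

    Assumption: the ratio is worst / best, so 1.0 means perfectly even.
    """
    best = max(win_chances)
    worst = min(win_chances)
    if best == 0:
        # Nobody is expected to win, so every player is equally (un)likely
        # to win and this naive measure calls the game perfectly fair.
        return 1.0
    return worst / best


uneven = fairness([0.6, 0.4])               # a slightly lopsided two-player game
unwinnable = fairness([0.0, 0.0, 0.0, 0.0])  # still rated as perfectly fair
```

Under these assumptions an unwinnable game gets the top score, which is exactly the kind of result listed above.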

Early fixes

I fixed some of it by doing some basic processing on settings before simulating. If the winline won’t fit, or there aren’t enough unique winlines to achieve the target score, I just reject it. Also, for now, I limited it to just two players.
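A rough Python sketch of that kind of pre-check, assuming a rectangular board and straight win lines (horizontal, vertical and both diagonals). The function names and the exact rejection rule are hypothetical, not the app's actual code.

```python
def count_win_lines(width, height, line_length):
    """Count distinct straight lines of the given length on a rectangular board."""
    if line_length < 2:
        return 0  # assumption: a win line needs at least two cells
    across = max(width - line_length + 1, 0)   # start columns where the line fits
    down = max(height - line_length + 1, 0)    # start rows where the line fits
    horizontal = across * height
    vertical = down * width
    diagonal = 2 * across * down               # both diagonal directions
    return horizontal + vertical + diagonal


def is_playable(width, height, line_length, target_score):
    """Reject settings where the line cannot fit or the target is unreachable."""
    lines = count_win_lines(width, height, line_length)
    return lines > 0 and lines >= target_score
```

For standard tic-tac-toe (3 by 3, lines of 3) this counts the familiar eight lines, so a target score of 1 passes while a line length of 4 is rejected.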

The results were better, but basically just a more complex version of the above: games in which the AI did not manage to actually achieve a win in its simulations.

Refining the AI

One possible problem I identified was that the “chances of winning” calculation isn’t really about winning. It’s actually a kind of fuzzy number combining winning, and tying for first. In this case, tying for first is something I want to avoid. To fix this I parameterized the core AI engine, so the way it assigns a score to outcomes could be varied. There were initially two options:

- Original AI: Winning scores 1, tying for first scores 0.5, everything else scores 0

- “Win only” AI: Winning scores 1, everything else scores 0

This means I can now look for games that have a high fairness, and a reasonably high chance of actually winning a game.
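The two scoring options can be sketched as interchangeable functions. This is an illustrative Python version only. The `Outcome` enum, the function names and the example probabilities are all made up for the sketch.

```python
from enum import Enum


class Outcome(Enum):
    WIN = "win"
    TIE_FOR_FIRST = "tie_for_first"
    OTHER = "other"


def original_score(outcome):
    """Original AI: a win scores 1, tying for first scores 0.5, anything else 0."""
    return {Outcome.WIN: 1.0, Outcome.TIE_FOR_FIRST: 0.5}.get(outcome, 0.0)


def win_only_score(outcome):
    """'Win only' AI: a win scores 1, everything else scores 0."""
    return 1.0 if outcome is Outcome.WIN else 0.0


def expected_score(outcome_probabilities, score):
    """Weight each outcome's score by how likely the outcome is."""
    return sum(p * score(o) for o, p in outcome_probabilities.items())


# A hypothetical position that mostly ends in a tie for first.
position = {Outcome.WIN: 0.2, Outcome.TIE_FOR_FIRST: 0.6, Outcome.OTHER: 0.2}
blended = expected_score(position, original_score)
wins_only = expected_score(position, win_only_score)
```

With the original scoring this position looks like an even game (about 0.5), while the win-only scoring shows that an outright win is unlikely (0.2).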

First derived game mode

Final updated AI

Separate to my goals of generating interesting game modes, I also took this opportunity to actually improve the AI. For games with more than two players, the (normal) AI now takes into account relative positions. So, for example with three players the following outcomes all have unique scores:

- Finishing first, with no ties.

- Tying for first.

- Finishing second, with no ties.

- Tying for second.

- Finishing third.

This hopefully stops the situation in which multiplayer games end up with several of the AIs “giving up” since they’ve determined they can’t possibly finish first.
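One way to get distinct values for those five outcomes is to count opponents beaten and opponents tied. The Python sketch below is an assumed scheme for illustration, not necessarily the formula the engine uses.

```python
def placement_score(scores, player):
    """Score one player's finishing position on a 0..1 scale.

    Assumption: each opponent beaten counts 1, each opponent tied with
    counts 0.5, and the total is divided by the number of opponents.
    """
    mine = scores[player]
    others = [s for i, s in enumerate(scores) if i != player]
    if not others:
        return 1.0
    beaten = sum(1 for s in others if s < mine)
    tied = sum(1 for s in others if s == mine)
    return (beaten + 0.5 * tied) / len(others)


# The five three-player outcomes listed above, from best to worst.
outcomes = [
    placement_score([3, 2, 1], 0),  # first, no ties
    placement_score([3, 3, 1], 0),  # tying for first
    placement_score([3, 2, 1], 1),  # second, no ties
    placement_score([3, 1, 1], 1),  # tying for second
    placement_score([3, 2, 1], 2),  # third
]
```

Under this scheme the five outcomes score 1, 0.75, 0.5, 0.25 and 0. A three-way tie would also score 0.5, so a real implementation might weight ties differently.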

It’s become a running gag that many commercial apps include release notes that just say something like “Bug fixes and improvements”. Often it will be because there are lots of minor fixes that are too small and numerous to list. But just as often, there will be lots of technical changes that, while important for various reasons, have no user-facing effect. This post will attempt to explain some of the changes.

Xamarin Forms

Tic-tac-toe Collection depends greatly on a library called Xamarin Forms. This version updates it from version 3.4 to 4.4. There aren’t any major features in use yet, but there are a bunch of performance and stability improvements nonetheless.

Xamarin Essentials

This is a new dependency, and is partly the reason for the new Android 5 requirement. The key feature this brings is detection of light/dark mode, but it is also now used for launching external sites (for example: rating an app on iOS < 10.3 or accessing any web page on an Android device with Chrome custom tab support).

AndroidX

Android has long provided Android Support Library as a way of accessing new features on older versions of Android. AndroidX is conceptually just the next version of this. In practice, the implementation is very different and getting support for it in Xamarin has taken a while.

Removals

Several libraries have been removed.

Iconize by Jeremy Marcus is a library for using icon fonts in Xamarin Forms. I’ve used icon fonts since the very beginning and this library was a huge part of that. Enough of the functionality is now included directly in Xamarin Forms that the library is no longer needed.

Xam.Plugin.Connectivity and Xam.Plugin.Settings by James Montemagno have been superseded by Xamarin Essentials.

CarouselView.FormsPlugin by Alexander Reyes. For a long time, this was the best carousel view for Xamarin Forms, but there is finally a version provided directly.

Acr.UserDialogs by Allan Ritchie provides system dialogs. After including Rg.Plugins.Popup for providing popup functionality, I switched to using that for simpler dialogs too for better style consistency.

Others

There are a handful of other libraries that have been updated just on the principle that staying up-to-date is better.

The server component for Tic-tac-toe Collection has always been based on Azure Functions, a serverless compute platform by Microsoft. It is currently used to run the cloud AI and the game simulation used for fairness estimation, and does this by running the exact same code as the app. And, pretty much like the blog, deploying it was a slightly error-prone mostly manual process.

But like the blog, I’ve automated the deployment using an Azure pipeline.

The first update deployed with the new system is an improvement to the fairness estimation.

Estimating fairness

The fairness estimation is performed by simulating a game for a few moves, and then seeing how each of the AIs rates its best possible next move. A big problem with this method is that the AI is probabilistic, so a single simulation is unlikely to be accurate (and sometimes it will end up being terrible). And, since the result for each combination of settings is cached, if an estimate is bad, it will stay bad.

With the update however, each subsequent request for a fairness estimation queues another one to run, and the results are averaged. So hopefully over time the estimation will actually become accurate.
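The averaging can be done incrementally without storing every past result. Here is a minimal Python sketch of that idea. The `FairnessCache` class and its in-memory dictionary are assumptions for illustration, and the real server may store and queue things quite differently.

```python
class FairnessCache:
    """Keeps a running mean of fairness estimates per settings combination."""

    def __init__(self):
        self._entries = {}  # settings key -> (mean, sample count)

    def add_sample(self, settings_key, estimate):
        """Fold one new simulation result into the cached average."""
        mean, count = self._entries.get(settings_key, (0.0, 0))
        count += 1
        mean += (estimate - mean) / count  # incremental mean update
        self._entries[settings_key] = (mean, count)
        return mean

    def get(self, settings_key):
        """Return the current average, or None if nothing has been simulated yet."""
        entry = self._entries.get(settings_key)
        return None if entry is None else entry[0]


cache = FairnessCache()
for estimate in (0.9, 0.7, 0.5):  # three noisy runs of the same settings
    cache.add_sample("3x3-classic", estimate)
```

A single bad first estimate no longer sticks, because each later run pulls the cached value toward the true average.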

Considering draws

Another change is that draws are now considered. For example, previously, the estimation for standard tic-tac-toe gave the first player a score of 0.91 (on a scale of 0 - 1), which more or less means a 91% chance of winning, assuming draws aren’t possible. Now, the first player gets a score of 0.68, which is closer to what your intuition would suggest your chances of winning tic-tac-toe are.

A while ago I switched the blog from Wordpress to Hugo. Since the switch, updating the blog has had a few steps to it:

- Write the new content.

- Commit to the git repo.

- Run the `hugo` command to generate the site.

- Upload to Azure Blob Storage.

- Purge the CDN.

I’ve been running those steps manually up until now. But then I discovered this guide to deploying Hugo using Azure Pipelines by Michael Brinkman.

I had always planned to automate it, but I didn’t expect there to be prebuilt steps for doing it in Azure Pipelines, notably Hugo by Giulio Vian and Purge Azure CDN Endpoint by Fabien Lavocat.

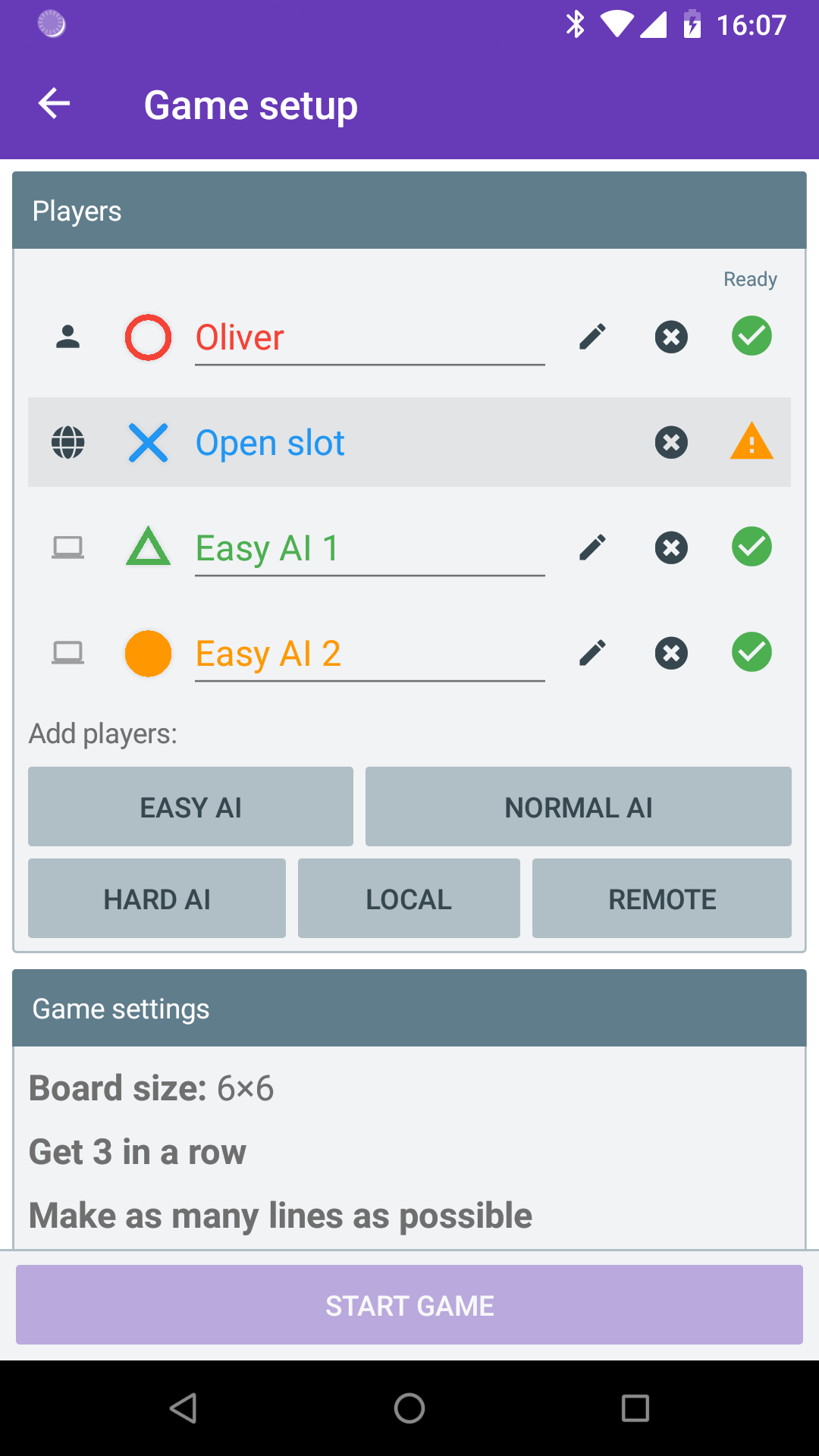

0.13 brings the first multi-device network play to Tic-tac-toe Collection, local network play. With this post I will explain some of the details about how it works and why I made certain decisions.

There are a few types of multi-device play I want to support, but I felt it was important to start with local network. The most important reason is that I strongly believe as much of the app as possible should work offline. In the long term, it should also continue to work if I stop maintaining any server infrastructure it uses. Another point is that local network play has fewer security considerations. I make a pretty big assumption that you trust who you are playing with, since they will likely be in the same room.

There are two quite separate parts to the multi-device process, and these two parts will exist regardless of whether the game is local network or over the internet, private or match-made, or pretty much anything else. They are device discovery, and actual game data exchange.

For local network device discovery I chose UDP broadcast. The game sends a short message to every device on the network, while simultaneously listening for broadcasts from other devices. It’s possible for networks to block broadcasts (and is quite common for public WiFi hotspots to prevent devices from seeing each other at all) but for most people at home, this will work. No real data is exchanged at this point.
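The general shape of UDP broadcast discovery looks something like the Python sketch below. It is not the app's code: the port number, the `ttt-discovery` marker and the message layout are all hypothetical, and the announcement carries nothing beyond an identifier and the port to connect to.

```python
import json
import socket

DISCOVERY_PORT = 50000   # hypothetical port, not the one the app uses
MAGIC = "ttt-discovery"  # hypothetical marker to ignore unrelated traffic


def encode_announcement(http_port):
    """Build the short message that is broadcast to the local network."""
    return json.dumps({"magic": MAGIC, "port": http_port}).encode("utf-8")


def decode_announcement(data):
    """Return the announcement dict, or None for anything unrecognised."""
    try:
        message = json.loads(data.decode("utf-8"))
    except (UnicodeDecodeError, ValueError):
        return None
    if not isinstance(message, dict) or message.get("magic") != MAGIC:
        return None
    return message


def broadcast_announcement(http_port):
    """Send one announcement to every device on the local network."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(encode_announcement(http_port),
                    ("255.255.255.255", DISCOVERY_PORT))


def listen_once(timeout_seconds=5.0):
    """Wait for one announcement; return (sender_address, message) or None."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
        sock.bind(("", DISCOVERY_PORT))
        sock.settimeout(timeout_seconds)
        try:
            data, (address, _port) = sock.recvfrom(1024)
        except socket.timeout:
            return None
        message = decode_announcement(data)
        return None if message is None else (address, message)
```

In practice a device would call `broadcast_announcement` on a timer while another thread loops on `listen_once`. The sender's address from the UDP packet plus the announced port is enough to start the HTTP exchange.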

For the exchange of actual game data there are quite a few options, but for the first release I decided on HTTP polling. A big reason for this was simplicity, but also the knowledge that in the future much of the code would be the same when running over the internet.

The HTTP server is implemented using EmbedIO, a small HTTP server that supports .NET Standard. The client uses Refit, a library that generates REST clients from interface definitions and my default choice for HTTP clients.

The structure of the API and data exchanged during play is really simple. There is one GET endpoint for getting the current state and another POST endpoint for sending a change. Additionally, ETags are used to avoid sending state data if nothing has changed.
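The ETag handling can be sketched without a real HTTP server. The Python below models the two endpoints as plain methods. The class name, the state layout and the way the ETag is derived (a hash of the serialized state) are assumptions, not details of the EmbedIO implementation.

```python
import copy
import hashlib
import json


class GameEndpoint:
    """In-memory model of the GET state / POST change pair."""

    def __init__(self, state):
        self._state = state

    def _etag(self):
        # Assumption: the ETag is a hash of the serialized state.
        body = json.dumps(self._state, sort_keys=True).encode("utf-8")
        return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'

    def get_state(self, if_none_match=None):
        """Handle GET: return (status, etag, body)."""
        etag = self._etag()
        if if_none_match == etag:
            return 304, etag, None  # nothing changed, so no body is sent
        return 200, etag, copy.deepcopy(self._state)

    def post_move(self, move):
        """Handle POST: apply a change to the shared state."""
        self._state["moves"].append(move)
        return 204


endpoint = GameEndpoint({"moves": []})
status, etag, state = endpoint.get_state()           # first poll gets the full state
unchanged_status, _, _ = endpoint.get_state(etag)    # next poll is a cheap 304
endpoint.post_move({"player": 0, "cell": 4})
changed_status, new_etag, state = endpoint.get_state(etag)
```

The polling client only needs to remember the last ETag it saw and send it back with each request.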

Overall, the technical side of implementing multiplayer was straightforward. The hardest part by far was designing the updated UI for showing player details, both on the player setup screen and in game. I think there are still improvements that can be made.

0.12.4 includes a significant rewrite of large parts of the core game engine. Bits that I didn’t like had started to build up, and some plans I have for new game modes looked to be quite difficult to implement because of decisions I made earlier that seemed like a good idea at the time.

The current state of the engine is now much better; however, the process does make me nervous. The changes affect not just how the engine plays the game, but also how data is saved and loaded. And people generally don’t like their save games being corrupted.

So as part of the work for this version I also wrote a tool (that I have been intending to write for a while) that checks for save game compatibility. The idea is the tool creates games with various combinations of settings, plays them through (with the easy AI) and saves the result. As part of the normal automated tests I run with each version, these save games are then loaded, the moves replayed, and the result compared with the previous result. Any discrepancies are failures that I can investigate.
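The replay-and-compare idea can be shown with a toy engine. In this Python sketch `play` is a deliberately trivial stand-in for the real game engine, and the save format is invented for the example.

```python
import json


def play(settings, moves):
    """Toy stand-in for the engine: replay the moves and return a result."""
    size = settings["size"]
    board = [None] * (size * size)
    for turn, cell in enumerate(moves):
        if board[cell] is not None:
            raise ValueError(f"cell {cell} is already taken")
        board[cell] = turn % settings["players"]
    return {"board": board, "moves_played": len(moves)}


def create_save(settings, moves):
    """Run with the old engine: store the settings, moves and expected result."""
    result = play(settings, moves)
    return json.dumps({"settings": settings, "moves": moves, "result": result})


def check_save(save_text):
    """Run with the current engine: replay the moves and compare results."""
    save = json.loads(save_text)
    replayed = play(save["settings"], save["moves"])
    return replayed == save["result"]


save_text = create_save({"size": 3, "players": 2}, [4, 0, 8])
compatible = check_save(save_text)
```

The value of the approach comes from generating the save files with one version of the engine and checking them with a later one, so any change in behaviour shows up as a mismatch.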

Importantly, the initial set of games is based on running the tool before the big engine changes. Unfortunately (or perhaps fortunately) it discovered a bug straight away. Loading a game with “free turn” set to true (i.e. any of the Chain game modes) would not always pick up the “free turn” setting (this was fixed in 0.12.3).

So the plan is every time I add a new game mode (or change the engine significantly), I generate a new set of save files, and during every release the current set of save files is checked for compatibility. Hopefully this should keep everyone happily saving and loading.

0.9.2 doesn’t contain any new features, but does contain a lot of optimizations. Here is more detail on some of them.

Tic-tac-toe Collection is built using Xamarin Forms. This post assumes familiarity with Xamarin, Xamarin Forms and .NET. I do have another post planned with more detail on these steps for those less familiar.

Firstly, I enabled Proguard, a tool for stripping out unused code at the Java byte code level. Doing this for Xamarin is a bit weird because the version of Proguard you get by default does not work if you are targeting Android 7.0 or newer. A Nuget package is recommended but that didn’t work for me either due to some path issues. So I just extracted the jar and specified the path manually.

I grabbed an example Proguard config file off the internet, added some extra bits I found elsewhere for Google Ads, and tried to run it. I worked through the errors about missing things, adding to the config file as I went. On the whole, actually straightforward.

The next step was to enable “link all” in the Xamarin linker settings, to remove unused code at the .NET level. This time however, instead of immediately excluding things from linking that caused problems, I realised I could actually reduce the number of things only referenced using reflection.

By default Xamarin Forms uses reflection heavily when data binding. This can be largely avoided by using compiled bindings. To use it you need to do two things: firstly, enable XAML compilation (which you should have been using already); secondly, add appropriate `x:DataType` properties to your XAML.

This underused feature allows the compiler to generate strongly typed bindings based on the type you specify.

After doing that, the only things left that the linker was breaking were types used in JSON serialization, which were easily fixed.

The final thing I did was to replace Autofac. Autofac was the first IoC container I was introduced to and has been my default choice, pretty much without any thought. However, I came across this chart of IoC performance and realised I was not using any of the clever features that justified Autofac, so I switched to LightInject.

Enabling Proguard and the linker cut the APK size down from about 32MB to 22MB. The improvements from the compiled bindings and Autofac changes are harder to measure.

The most notable improvement is cutting in half the time to go from the main screen to the game screen directly, and all of the steps will have helped.